publications

An up-to-date list can be found on my Google Scholar. The symbol * denotes joint first authors.

2025

- Easing Optimization Paths: A Circuit Perspective. Ambroise Odonnat*, Wassim Bouaziz*, and Vivien Cabannes. ICASSP Oral, 2025.

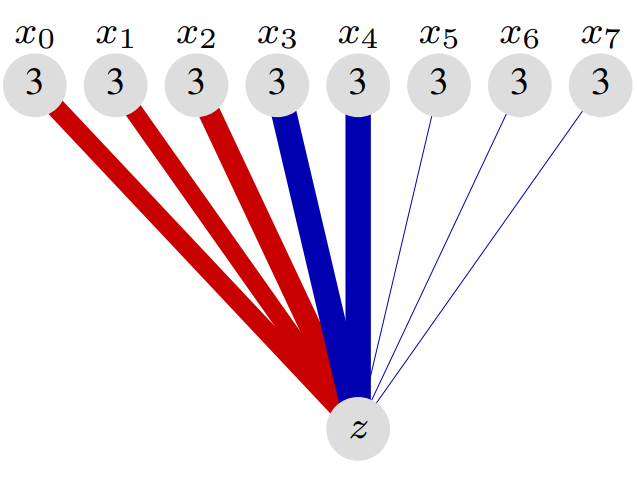

Gradient descent is the method of choice for training large artificial intelligence systems. As these systems become larger, a better understanding of the mechanisms behind gradient training would allow us to alleviate compute costs and help steer these systems away from harmful behaviors. To that end, we suggest utilizing the circuit perspective brought forward by mechanistic interpretability. After laying out our intuition, we illustrate how it enables us to design a curriculum for efficient learning in a controlled setting. The code is available at https://github.com/facebookresearch/pal.

- Zero-shot Model-based Reinforcement Learning using Large Language Models. Abdelhakim Benechehab, Youssef Attia El Hili, Ambroise Odonnat, and 6 more authors. ICLR, 2025.

The emerging zero-shot capabilities of Large Language Models (LLMs) have led to their applications in areas extending well beyond natural language processing tasks. In reinforcement learning, while LLMs have been extensively used in text-based environments, their integration with continuous state spaces remains understudied. In this paper, we investigate how pre-trained LLMs can be leveraged to predict in context the dynamics of continuous Markov decision processes. We identify handling multivariate data and incorporating the control signal as key challenges that limit the potential of LLMs’ deployment in this setup and propose Disentangled In-Context Learning (DICL) to address them. We present proof-of-concept applications in two reinforcement learning settings: model-based policy evaluation and data-augmented off-policy reinforcement learning, supported by theoretical analysis of the proposed methods. Our experiments further demonstrate that our approach produces well-calibrated uncertainty estimates. We release the code at https://github.com/abenechehab/dicl.

2024

- SKADA-Bench: Benchmarking Unsupervised Domain Adaptation Methods with Realistic Validation On Diverse Modalities. Yanis Lalou*, Théo Gnassounou*, Antoine Collas*, and 6 more authors. Preprint, 2024.

Unsupervised Domain Adaptation (DA) consists of adapting a model trained on a labeled source domain to perform well on an unlabeled target domain with some data distribution shift. While many methods have been proposed in the literature, fair and realistic evaluation remains an open question, particularly due to methodological difficulties in selecting hyperparameters in the unsupervised setting. With SKADA-Bench, we propose a framework to evaluate DA methods on diverse modalities, beyond the computer vision tasks that have been largely explored in the literature. We present a complete and fair evaluation of existing shallow algorithms, including reweighting, mapping, and subspace alignment. Realistic hyperparameter selection is performed with nested cross-validation and various unsupervised model selection scores, on both simulated datasets with controlled shifts and real-world datasets across diverse modalities, such as images, text, biomedical, and tabular data. Our benchmark highlights the importance of realistic validation and provides practical guidance for real-life applications, with key insights into the choice and impact of model selection approaches. SKADA-Bench is open-source, reproducible, and can be easily extended with novel DA methods, datasets, and model selection criteria without re-evaluating competitors.

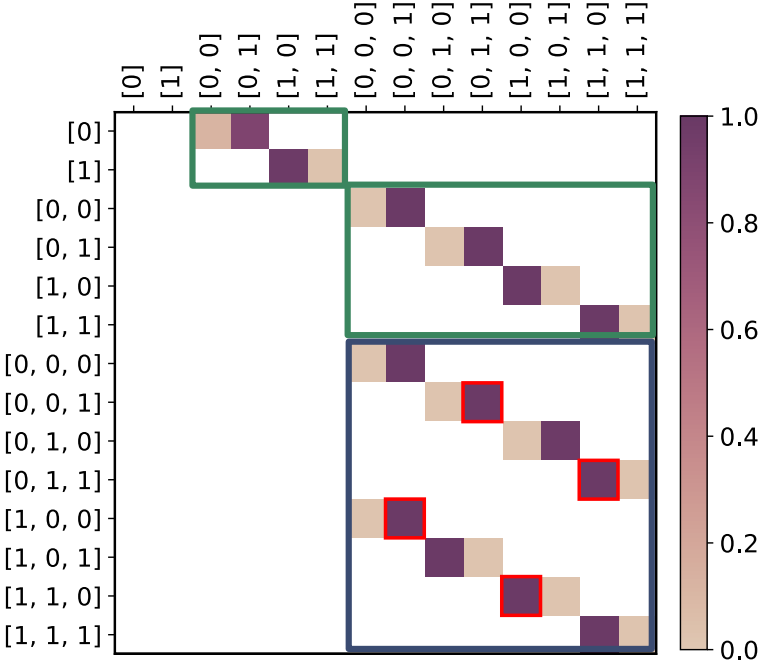

- Clustering Head: A Visual Case Study of the Training Dynamics in Transformers. Ambroise Odonnat, Wassim Bouaziz, and Vivien Cabannes. Preprint, 2024.

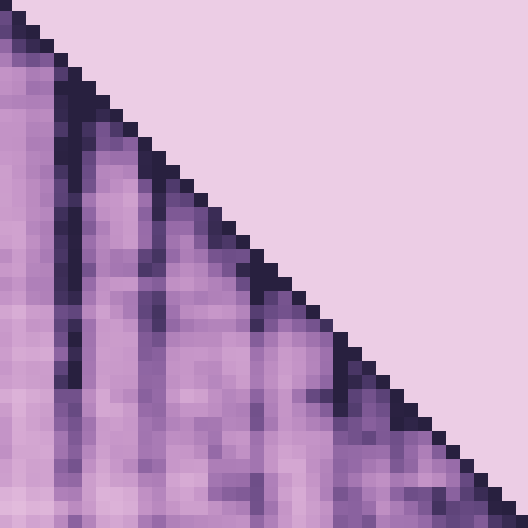

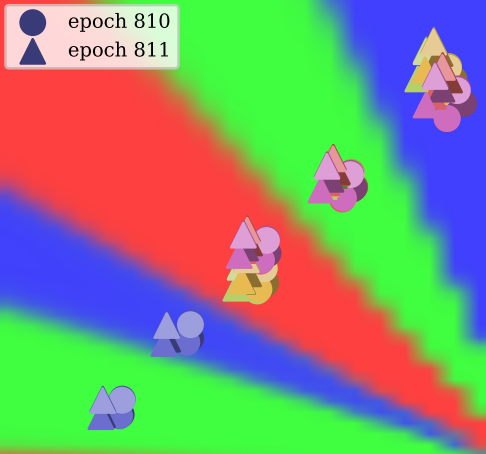

This paper introduces a visual sandbox designed to explore the training dynamics of a small-scale transformer model, with the embedding dimension constrained to d = 2. This restriction allows for a comprehensive two-dimensional visualization of each layer’s dynamics. Through this approach, we gain insights into training dynamics, circuit transferability, and the causes of loss spikes, including those induced by the high curvature of normalization layers. We propose strategies to mitigate these spikes, demonstrating how good visualization facilitates the design of innovative ideas of practical interest. Additionally, we believe our sandbox could assist theoreticians in assessing essential training dynamics mechanisms and integrating them into future theories. The code is available at https://github.com/facebookresearch/pal.

- Large Language Models as Markov Chains. Oussama Zekri*, Ambroise Odonnat*, Abdelhakim Benechehab, and 3 more authors. Preprint, 2024.

Large language models (LLMs) have proven to be remarkably efficient, both across a wide range of natural language processing tasks and well beyond them. However, a comprehensive theoretical analysis of the origins of their impressive performance remains elusive. In this paper, we approach this challenging task by drawing an equivalence between generic autoregressive language models with a vocabulary of size T and a context window of size K and Markov chains defined on a finite state space of size O(T^K). We derive several surprising findings related to the existence of a stationary distribution of Markov chains that capture the inference power of LLMs, their speed of convergence to it, and the influence of the temperature on the latter. We then prove pre-training and in-context generalization bounds and show how the drawn equivalence allows us to enrich their interpretation. Finally, we illustrate our theoretical guarantees with experiments on several recent LLMs to highlight how they capture the behavior observed in practice.
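To make the O(T^K) state-space size concrete, here is a minimal Python sketch. It assumes, for illustration only, that a state is any non-empty token sequence of length at most K; the function names and the toy vocabulary are hypothetical and are not taken from the paper.

```python
from itertools import product


def enumerate_states(vocab, context_window):
    """List every non-empty token sequence of length at most context_window.

    Assumption: these sequences play the role of Markov chain states.
    """
    states = []
    for length in range(1, context_window + 1):
        states.extend(product(vocab, repeat=length))
    return states


def closed_form_count(T, K):
    """Geometric sum T + T^2 + ... + T^K, which grows as O(T^K)."""
    if T == 1:
        return K
    return T * (T**K - 1) // (T - 1)


vocab = ["a", "b", "c"]  # toy vocabulary, T = 3
K = 4                    # toy context window
states = enumerate_states(vocab, K)
num_states = len(states)

# Brute-force enumeration and the closed form agree.
print(num_states, closed_form_count(len(vocab), K))  # 120 120
```

Even for this toy setting the count reaches 120 states, which hints at why the chain is studied analytically rather than enumerated for realistic vocabularies.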

- Analysing Multi-Task Regression via Random Matrix Theory with Application to Time Series Forecasting. Romain Ilbert, Malik Tiomoko, Cosme Louart, and 4 more authors. NeurIPS Spotlight, 2024.

In this paper, we introduce a novel theoretical framework for multi-task regression, applying random matrix theory to provide precise performance estimations under high-dimensional, non-Gaussian data distributions. We formulate a multi-task optimization problem as a regularization technique to enable single-task models to leverage multi-task learning information. We derive a closed-form solution for multi-task optimization in the context of linear models. Our analysis provides valuable insights by linking the multi-task learning performance to various model statistics such as raw data covariances, signal-generating hyperplanes, noise levels, as well as the size and number of datasets. We finally propose a consistent estimation of training and testing errors, thereby offering a robust foundation for hyperparameter optimization in multi-task regression scenarios. Experimental validations on both synthetic and real-world datasets in regression and multivariate time series forecasting demonstrate improvements over univariate models when our method is incorporated into the training loss, thereby leveraging multivariate information.

- MANO: Exploiting Matrix Norm for Unsupervised Accuracy Estimation Under Distribution Shifts. Renchunzi Xie*, Ambroise Odonnat*, Vasilii Feofanov*, and 3 more authors. NeurIPS, 2024.

Estimating test accuracy without access to the ground-truth test labels under varying test environments is a challenging, yet extremely important problem in the safe deployment of machine learning algorithms. Existing works rely on the information from either the outputs or the extracted features of neural networks to formulate an estimation score correlating with the ground-truth test accuracy. In this paper, we investigate, both empirically and theoretically, how the information provided by the gradients can be predictive of the ground-truth test accuracy even under a distribution shift. Specifically, we use the norm of classification-layer gradients, backpropagated from the cross-entropy loss after only one gradient step over test data. Our key idea is that the model should be adjusted with a higher magnitude of gradients when it does not generalize to the test dataset with a distribution shift. We provide theoretical insights highlighting the main ingredients of such an approach that ensure its empirical success. Extensive experiments conducted on diverse distribution shifts and model structures demonstrate that our method significantly outperforms state-of-the-art algorithms.

- SAMformer: Unlocking the Potential of Transformers in Time Series Forecasting with Sharpness-Aware Minimization and Channel-Wise Attention. Romain Ilbert*, Ambroise Odonnat*, Vasilii Feofanov, and 4 more authors. ICML Oral, 2024.

Transformer-based architectures achieved breakthrough performance in natural language processing and computer vision, yet they remain inferior to simpler linear baselines in multivariate long-term forecasting. To better understand this phenomenon, we start by studying a toy linear forecasting problem for which we show that transformers are incapable of converging to their true solution despite their high expressive power. We further identify the attention of transformers as being responsible for this low generalization capacity. Building upon this insight, we propose a shallow lightweight transformer model that successfully escapes bad local minima when optimized with sharpness-aware optimization. We empirically demonstrate that this result extends to all commonly used real-world multivariate time series datasets. In particular, SAMformer surpasses the current state-of-the-art model TSMixer by 14.33% on average, while having 4 times fewer parameters. The code is available at https://github.com/romilbert/samformer.

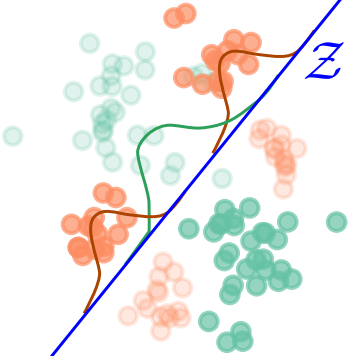

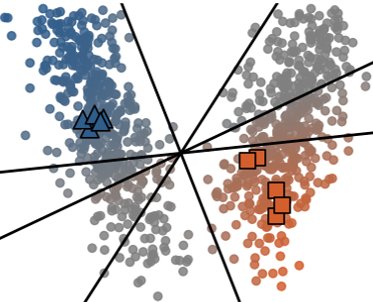

- Leveraging Ensemble Diversity for Robust Self-Training in the Presence of Sample Selection Bias. Ambroise Odonnat, Vasilii Feofanov, and Ievgen Redko. AISTATS, 2024.

Self-training is a well-known approach for semi-supervised learning. It consists of iteratively assigning pseudo-labels to unlabeled data for which the model is confident and treating them as labeled examples. For neural networks, softmax prediction probabilities are often used as a confidence measure, although they are known to be overconfident, even for wrong predictions. This phenomenon is particularly intensified in the presence of sample selection bias, i.e., when data labeling is subject to some constraints. To address this issue, we propose a novel confidence measure, called $\mathcal{T}$-similarity, built upon the prediction diversity of an ensemble of linear classifiers. We provide a theoretical analysis of our approach by studying stationary points and describing the relationship between the diversity of the individual members and their performance. We empirically demonstrate the benefit of our confidence measure for three different pseudo-labeling policies on classification datasets of various data modalities. The code is available at https://github.com/ambroiseodt/tsim.
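As an illustration of an ensemble-based confidence measure, the sketch below scores each sample by the average dot product between the predicted probability vectors of distinct ensemble members, then keeps pseudo-labels whose score exceeds a fixed threshold. Both the exact form of the score and the thresholding policy are assumptions made for this example; the function names are hypothetical and the released code may differ.

```python
import numpy as np


def ensemble_agreement(probs):
    """Average pairwise dot product between distinct members' predictions.

    probs has shape (M, n, C): M ensemble members, n samples, C classes.
    Returns an array of shape (n,). Assumed form of the confidence score.
    """
    num_members = probs.shape[0]
    total = probs.sum(axis=0)                            # (n, C)
    all_pairs = (total ** 2).sum(axis=-1)                # sum over (m, k) of p_m . p_k
    self_pairs = (probs ** 2).sum(axis=-1).sum(axis=0)   # sum over m of p_m . p_m
    return (all_pairs - self_pairs) / (num_members * (num_members - 1))


def select_pseudo_labels(probs, threshold):
    """Pseudo-label with the mean prediction; keep samples above the threshold."""
    confidence = ensemble_agreement(probs)
    labels = probs.mean(axis=0).argmax(axis=-1)
    mask = confidence >= threshold
    return labels, mask, confidence


# Toy example: 3 members, 2 samples, 2 classes.
# Members agree on the first sample and disagree on the second.
probs = np.array([
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [1.0, 0.0]],
])
labels, mask, confidence = select_pseudo_labels(probs, threshold=0.5)
print(confidence, mask)
```

In this toy case the first sample gets full agreement while the second is rejected, which is the behavior one wants when individual softmax outputs are overconfident.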

- Leveraging Gradients for Unsupervised Accuracy Estimation under Distribution Shift. Renchunzi Xie, Ambroise Odonnat, Vasilii Feofanov, and 3 more authors. Preprint, 2024.

Estimating test accuracy without access to the ground-truth test labels under varying test environments is a challenging, yet extremely important problem in the safe deployment of machine learning algorithms. Existing works rely on the information from either the outputs or the extracted features of neural networks to formulate an estimation score correlating with the ground-truth test accuracy. In this paper, we investigate, both empirically and theoretically, how the information provided by the gradients can be predictive of the ground-truth test accuracy even under a distribution shift. Specifically, we use the norm of classification-layer gradients, backpropagated from the cross-entropy loss after only one gradient step over test data. Our key idea is that the model should be adjusted with a higher magnitude of gradients when it does not generalize to the test dataset with a distribution shift. We provide theoretical insights highlighting the main ingredients of such an approach that ensure its empirical success. Extensive experiments conducted on diverse distribution shifts and model structures demonstrate that our method significantly outperforms state-of-the-art algorithms.
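A rough sketch of a gradient-norm score for a linear classification layer is given below. Since test labels are unavailable, it assumes the model's own argmax predictions are used as pseudo-labels in the cross-entropy loss; this labeling choice, the function names, and the toy data are assumptions for illustration, not the paper's exact procedure.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def gradient_norm_score(features, weights, bias):
    """Frobenius norm of the cross-entropy gradient w.r.t. the last-layer weights.

    features: (n, d) test features, weights: (d, C), bias: (C,).
    Assumption: pseudo-labels are the model's own argmax predictions.
    """
    probs = softmax(features @ weights + bias)
    pseudo_labels = probs.argmax(axis=-1)
    one_hot = np.eye(weights.shape[1])[pseudo_labels]
    # Gradient of the mean cross-entropy with respect to the weights.
    residual = (probs - one_hot) / features.shape[0]
    grad_weights = features.T @ residual
    return np.linalg.norm(grad_weights)


features = np.eye(2)  # toy test features
score_confident = gradient_norm_score(features, 5.0 * np.eye(2), np.zeros(2))
score_uncertain = gradient_norm_score(features, 0.1 * np.eye(2), np.zeros(2))
print(score_confident, score_uncertain)
```

In this toy setup, the layer that separates the two samples with a large margin yields a much smaller gradient norm than the nearly uninformative one, matching the intuition that larger gradients signal poorer fit to the test data.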

2022

- Detection of interictal epileptiform discharges on EEG and MEG. Ambroise Odonnat, Konstantinos Nasiotis, Eleanor Hill, and 7 more authors. Best Flash Talk, QBIN Scientific Day, 2022.

Epilepsy is the fourth most common neurological disorder in the world. It affects the central nervous system, leading to abnormal brain activity which causes seizures that are sometimes accompanied by loss of consciousness. Electroencephalography (EEG) and magnetoencephalography (MEG) recordings contain patterns of abnormal brain activity such as interictal epileptiform discharges (IEDs), also known as spikes, that aid in the diagnosis of epilepsy and the identification of the epileptogenic zone. Due to their long recording time, high number of channels, and noisy nature, such recordings are tedious and time-consuming to analyze manually. These challenges have motivated the development of automated methods to detect epileptic spikes. Convolutional and Recurrent Neural Networks are among the most frequently chosen architectures for their feature extraction capacities. However, both have a limited ability to capture global dependencies, and recurrent networks lack efficiency because their steps cannot be parallelized. We developed a model based on a transformer architecture. Transformers are a breakthrough in the fields of Natural Language Processing and Computer Vision. They rely on a self-attention mechanism, which differentially weights the significance of each part of the input data, enhancing relevant parts while diminishing others. The aim of our framework is to detect spikes on EEG and/or MEG signals while being agnostic to the number of channels. First, the raw data is preprocessed (artifact removal, notch filter, etc.) and split into 2-second segments called ‘trials’ using the open-source Brainstorm software (https://neuroimage.usc.edu/brainstorm/). Spatial filtering is performed before applying the attention mechanism on the feature-channel dimension to focus on relevant channels. Then, embeddings of each time point are created before entering a transformer encoder to perceive global temporal dependencies. The output of the encoder is a highly distinguishable representation of the trial containing spatial and temporal information. The last block is composed of two fully-connected layers separated by a Mish activation function. It splits the data into 10 time windows and gives the probability of presence of a spike for each time window. Preliminary experiments were conducted on a single pediatric participant. A repeated 5-fold cross-validation strategy was used for training and validation. The first experimental results are promising, with an accuracy of 90% and an F1-score of 70%. Our model has good potential for learning spatial and temporal features on EEG and MEG signals. The next step is to perform cross-subject spike detection to obtain a robust framework usable in real-world situations.