Ambroise Odonnat

In front of TUM in Munich

I am a second-year Ph.D. student in Paris, working between Huawei Noah’s Ark Lab and Inria under the supervision of Romain Tavenard, Laetitia Chapel, and Ievgen Redko.

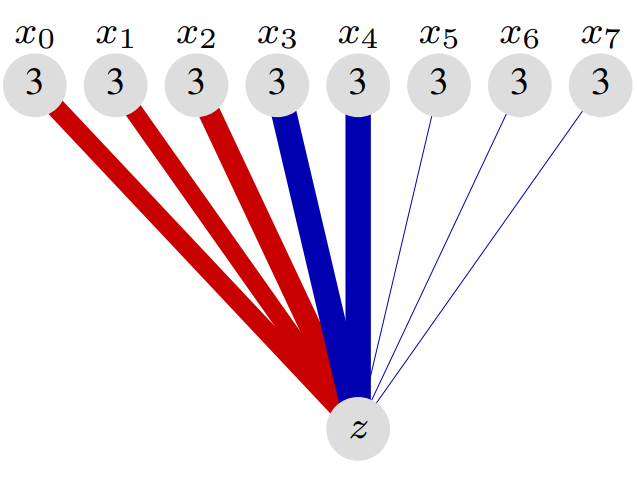

I am interested in better understanding transformers through theoretical studies and large-scale experiments on:

- Large language models (e.g., here and here)

- Transformer training and finetuning (e.g., here and here)

- Out-of-distribution generalization (e.g., here, here and here)

- Vision transformers and time series forecasting (see here)

I was lucky to receive an ICML Oral Award, an ICASSP Oral Award, and a QBIN Best Flash Talk Award for my research in these areas. On a more amusing (and surprising 🙃) note, one of my recent articles was featured in Forbes.

I enjoy working both with a few collaborators and as part of a larger team, contributing to open-source libraries and communicating about my research. I maintain a research blog, logB, and have had the privilege to present my research at leading institutions such as EPFL, Cohere, ENS Ulm, and Criteo.

I graduated from Ecole des Ponts ParisTech in 2023 and hold a master’s degree from ENS Paris-Saclay in Mathematics, Vision, and Machine Learning (MVA).

Don’t hesitate to reach out for possible collaborations or questions regarding my research!

news

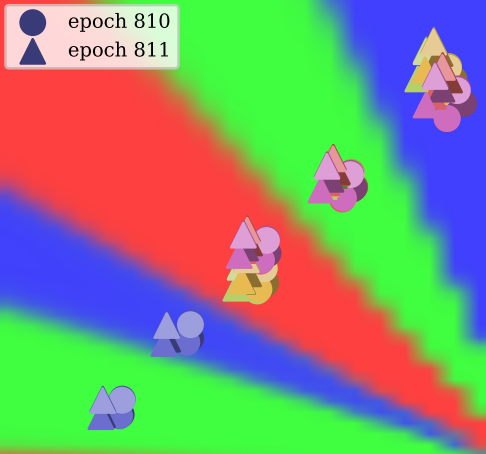

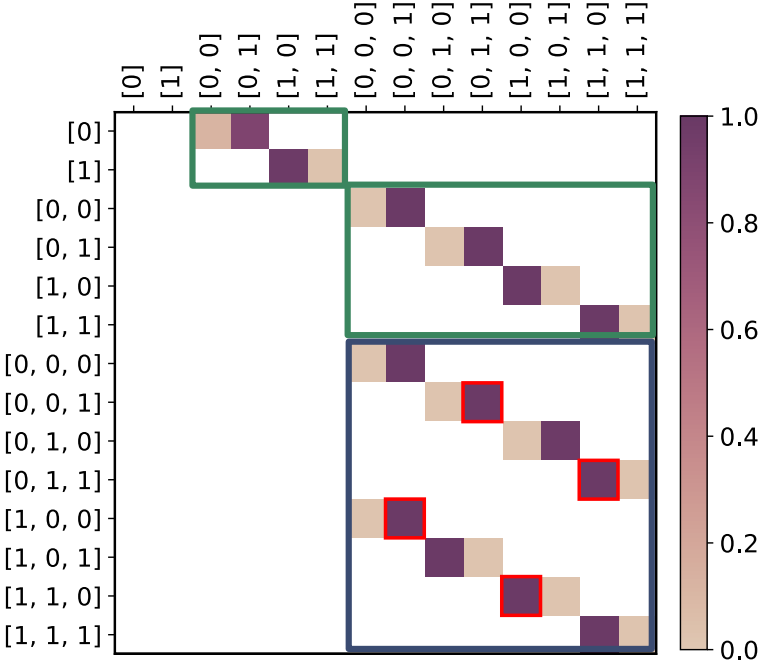

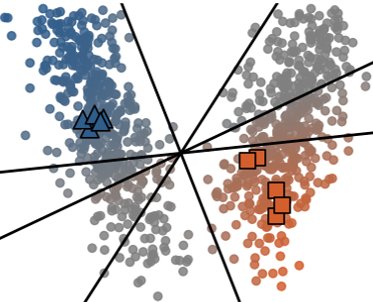

| Date | News |
|---|---|
| Jan 30, 2025 | 📑 New preprint on the training dynamics in Transformers: Clustering Heads. |
| Jan 22, 2025 | 🥳 DICL was accepted @ICLR 2025. |
| Dec 18, 2024 | 🥳 Easing Optimization Paths: A Circuit Perspective was accepted @ICASSP 2025. |
| Nov 12, 2024 | 🎉 Very happy to see Large Language Models as Markov Chains featured in Forbes! |
| Oct 02, 2024 | 📑 New preprint: Large Language Models as Markov Chains. |